How to ensure AI solutions go beyond the hammer and nails approach

I recently attended a fascinating TechUK roundtable on AI. The discussion brought together many different perspectives, and it got us all thinking that, amid the understandable hype, there is a risk of missing something crucial.

The scale of growth of AI tooling is unlike anything I’ve ever seen in my career. That’s not just me saying it. Here’s a slightly more authoritative viewpoint:

“AI had roughly linear progress from 1960s-2010, then exponential 2010-2020s, then started to display ‘compounding exponential’ properties in 2021/22 onwards. In other words, the next few years will yield progress that intuitively feels nuts.”

Jack Clark, the Co-Chair of AI Index at Stanford University.

Along with the technical progress, public curiosity about AI is also growing at a compounding exponential rate. This explosion in interest was fuelled first by ChatGPT, and now by other publicly available generative AI tools.

It seems to me that with this unprecedented growth, there is a real risk that we jump to AI solutions first and then look for justifications for using them.

More than hammer and nails

Another quote for you…

“When all you have is a hammer, everything looks like a nail”

Abraham Maslow

I’ve used this phrase for years without realising it comes from Abraham Maslow (he of “Hierarchy of Needs” fame). It applies here: a rush to adopt AI could create additional problems rather than address fundamental needs.

The emergence of these tools is very exciting and offers real potential to accelerate change. But I worry that we run the risk of letting the solutions dictate the approach and obscure the very problems we need to solve. There are risks associated with this (and apologies in advance to both reader and Maslow for the abuse of the analogy):

- We create other problems by using the wrong approach: maybe the actual problem was a loose screw, and by using our hammer all we’ve got now is a damaged hammer and a broken screw.

- We artificially (pun intended) inflate the priority of problems that can be solved by AI tools, diverting our gaze from higher priority problems — by looking for the nails we overlook screws that are causing more of an issue.

- Using AI tools inappropriately could lead to scepticism, which creates a barrier to future adoption when the right problems finally emerge: we throw away the hammer because we’ve decided it’s useless… and then we find the nails.

More seriously, I worry that in our haste to deploy AI and ‘proofs of concept,’ we risk underestimating the ethical concerns that emerge with AI. The proof-of-concept label could become a subconscious excuse to ‘just get going’ and so bake in an unknown and potentially unacceptable level of risk, including discrimination and other unintended consequences.

AI and public sector

Not to be doom and gloom, I actually believe public sector organisations are in a great position to use AI. When we work on these projects, we don’t start with the solution. We look at:

- Aligning on what the organisation wants to achieve, how we’ll measure success and how much we’re willing to invest to achieve this.

- Understanding users, their goals and motivations and the things that stand in the way of them achieving the outcomes that will add up to achieving the organisational goal.

- Defining constraints (e.g. cost, legislation, security, reputation).

When we truly understand these, AI tools are an amazing set of capabilities to use as we co-design and iterate through to potentially viable, feasible and desirable solutions.

This doesn’t need to be a hugely time-consuming endeavour, as we’ve seen with our Transformation Day approach. We can quickly gain alignment on desired outcomes, the highest priority problems to solve and potential approaches to solutions.

At Zaizi we’re looking at the transformative potential of AI. AI tools present entirely new opportunities to try things we’ve never done, in ways we’ve never thought of.

But progress is not just about using the technology itself. It’s about our ability to understand the problem we’re solving and whether AI genuinely offers the solution to that problem.

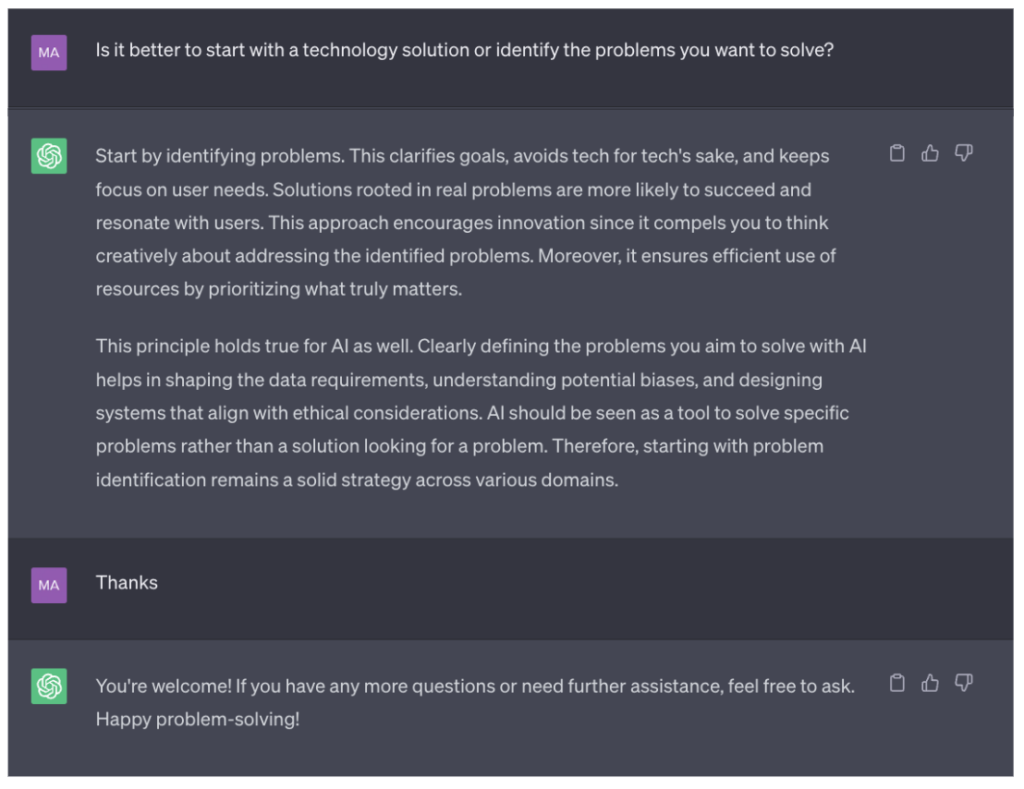

And ChatGPT agrees (so it must be right):
